Amazon has introduced AWS AI Factories, a managed service built in partnership with Nvidia that brings high-performance AI computing directly into customer data centers. The offering combines AWS-managed infrastructure with Nvidia GPUs or Amazon’s Trainium3 accelerators, so organizations can train and run AI models on sensitive data without moving it off-premises. Customers retain data sovereignty while using the same AWS services and tools they use in the AWS cloud.

Core Components of AWS AI Factories Infrastructure

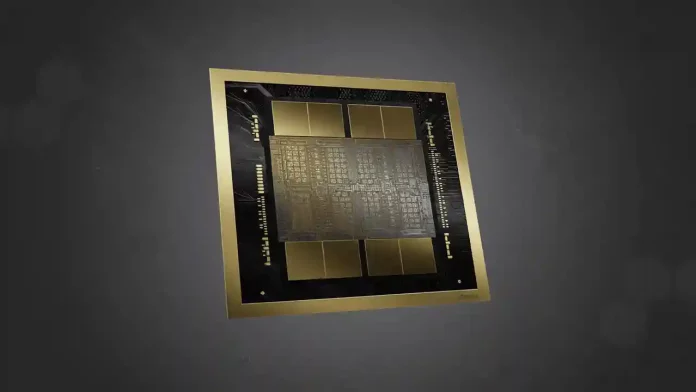

The service integrates Nvidia Blackwell-class GPUs or Amazon Trainium3 accelerators with AWS networking, storage, security, and operational frameworks. Customers provide the power, space, and network connectivity. AWS handles cluster deployment and management, along with integration with services such as Amazon Bedrock for foundation model access and Amazon SageMaker for model development workflows. The result is a fully managed private supercomputer that keeps the same toolchain across on-premises and cloud deployments.

Driving Forces Behind On-Premises AI Momentum

Regulatory compliance and data sovereignty requirements push banks, healthcare providers, government agencies, and critical infrastructure operators to process sensitive data within controlled environments. Regulations such as GDPR and HIPAA, along with national security policies, restrict where and how data can be transferred, which rules out public cloud processing for many high-value workloads. Latency-critical applications such as factory automation, medical imaging analysis, and real-time fraud detection also benefit from running inference next to their data sources. This removes the round trip to a remote cloud region and makes it easier to keep improving models on fresh local data.
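One rough way to see the latency argument is to compare a per-frame time budget with the network round trip plus inference time. The sketch below assumes a 60 fps inspection camera and illustrative round-trip and inference times. The `Deployment` type, the helper functions, and all numbers are hypothetical and are not measurements of any AWS service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    name: str
    network_rtt_ms: float  # assumed round trip between camera and inference host
    inference_ms: float    # assumed model execution time per frame


def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at a given frame rate."""
    return 1000.0 / fps


def fits_budget(d: Deployment, fps: float) -> bool:
    """True if network round trip plus inference fits within one frame interval."""
    return d.network_rtt_ms + d.inference_ms <= frame_budget_ms(fps)


if __name__ == "__main__":
    fps = 60  # hypothetical production-line camera rate
    candidates = [
        Deployment("on-premises", network_rtt_ms=1.0, inference_ms=8.0),
        Deployment("remote cloud region", network_rtt_ms=30.0, inference_ms=8.0),
    ]
    for d in candidates:
        total = d.network_rtt_ms + d.inference_ms
        verdict = "fits" if fits_budget(d, fps) else "misses frames"
        print(f"{d.name}: {total:.1f} ms vs {frame_budget_ms(fps):.1f} ms budget -> {verdict}")
```

With these assumed figures, the same model fits the budget locally but not across a 30 ms round trip; real numbers depend on the site, network path, and model.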

Competitive Landscape in Managed On-Premises AI

Microsoft offers Azure Local with Nvidia-powered infrastructure, Google provides Distributed Cloud and Anthos for hybrid orchestration, while Oracle targets regulated sectors through Alloy and dedicated regions. AWS differentiates by tightly coupling on-premises hardware with its comprehensive AI platform services, offering flexibility between Nvidia Blackwell GPUs and proprietary Trainium3 accelerators to address supply constraints and optimize workload portability across environments.

Performance and Cost Optimization with Nvidia and Trainium

Nvidia’s Blackwell architecture delivers substantial efficiency gains over previous generations for large-scale training and high-throughput inference. Amazon positions its Trainium3 accelerators as offering better price-performance for certain workloads, backed by a long-term roadmap commitment. On the AWS side, the stack includes Nitro System virtualization, Elastic Fabric Adapter (EFA) networking, managed storage, and security services, while Nvidia’s software ecosystem provides framework support and cluster management.
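Price-performance claims are easiest to compare when reduced to a single unit cost. The sketch below expresses it as dollars per million generated tokens, computed from an hourly node cost and sustained throughput. The option names and all prices and throughputs are placeholders, not published figures for Blackwell or Trainium3 systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceleratorOption:
    name: str
    hourly_cost_usd: float    # assumed all-in cost per node-hour
    tokens_per_second: float  # assumed sustained inference throughput per node


def cost_per_million_tokens(opt: AcceleratorOption) -> float:
    """Price-performance expressed as dollars per one million generated tokens."""
    if opt.tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = opt.tokens_per_second * 3600
    return opt.hourly_cost_usd * 1_000_000 / tokens_per_hour


def cheapest(options: list[AcceleratorOption]) -> AcceleratorOption:
    """Pick the option with the lowest cost per million tokens."""
    return min(options, key=cost_per_million_tokens)


if __name__ == "__main__":
    # Placeholder figures for illustration only; substitute measured values.
    options = [
        AcceleratorOption("option-a", hourly_cost_usd=98.0, tokens_per_second=20_000),
        AcceleratorOption("option-b", hourly_cost_usd=60.0, tokens_per_second=14_000),
    ]
    for opt in options:
        print(f"{opt.name}: ${cost_per_million_tokens(opt):.2f} per 1M tokens")
    print("lowest cost per token:", cheapest(options).name)
```

In this made-up example, the faster option is not the cheaper one per token. That is the kind of trade-off "price-performance for certain workloads" refers to.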

Power Infrastructure Challenges for AI Factory Deployments

AI Factories demand far higher power densities than traditional IT infrastructure, typically 30 to 60 kW per rack, with flagship GPU configurations going higher still. Supporting them requires upgrades to cooling systems, power distribution units, and structural floor loading capacity. Industry analysts identify rising rack densities as a primary challenge for data center operators, because AI clusters concentrate extreme power requirements in small footprints.
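The arithmetic behind these facility upgrades is simple to sketch. The example below estimates IT load, total facility draw, and annual energy from rack count, per-rack density, and an assumed power usage effectiveness (PUE). It then shows how many lower-density racks the same load would occupy. The 20-rack cluster, the PUE of 1.3, and the 8 kW "legacy" rack are hypothetical inputs.

```python
import math


def cluster_power(racks: int, kw_per_rack: float, pue: float) -> dict[str, float]:
    """Estimate IT load, facility draw, and annual energy for a GPU cluster.

    pue is power usage effectiveness (total facility power / IT power); it is an
    assumed input here, not a published figure for any particular site.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_kw = racks * kw_per_rack
    facility_kw = it_kw * pue
    annual_mwh = facility_kw * 24 * 365 / 1000  # kW x hours -> kWh -> MWh
    return {"it_kw": it_kw, "facility_kw": facility_kw, "annual_mwh": annual_mwh}


def racks_needed(it_kw: float, kw_per_rack: float) -> int:
    """Racks required to host a given IT load at a given per-rack density."""
    return math.ceil(it_kw / kw_per_rack)


if __name__ == "__main__":
    # Hypothetical 20-rack cluster at the top of the 30-60 kW range, assumed PUE 1.3
    est = cluster_power(racks=20, kw_per_rack=60, pue=1.3)
    print(f"IT load: {est['it_kw']:,.0f} kW, facility draw: {est['facility_kw']:,.0f} kW, "
          f"annual energy: {est['annual_mwh']:,.0f} MWh")
    # The same IT load spread over hypothetical 8 kW legacy racks
    print("equivalent racks at 8 kW:", racks_needed(est["it_kw"], 8))
```

The comparison makes the density problem concrete. A load that fits in 20 high-density racks would need many times more floor positions at conventional densities, so the power and heat arrive in a much smaller space.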

Commercial and Operational Benefits for Enterprises

The managed service model shifts hardware lifecycle management, firmware updates, driver maintenance, and incident response to AWS under SLA-backed guarantees. Procurement options include reserved capacity, consumption-based billing, and multi-year commitments that mirror cloud economics. Enterprises can reach production faster without building specialized internal teams for GPU integration, network optimization, storage orchestration, and MLOps platform development.
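Choosing between reserved capacity and consumption-based billing usually comes down to expected utilization. The sketch below computes the break-even utilization at which a flat monthly reservation costs the same as paying per hour. It assumes a simple pricing model with one hourly rate and one flat fee. Real AI Factories pricing terms are not described here, and the prices in the example are hypothetical.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12


def consumption_monthly_cost(rate_per_hour: float, utilization: float) -> float:
    """Monthly bill under pay-per-use pricing at a given average utilization (0 to 1)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return rate_per_hour * HOURS_PER_MONTH * utilization


def break_even_utilization(reserved_monthly: float, rate_per_hour: float) -> float:
    """Utilization above which a flat reserved fee is cheaper than paying per hour."""
    return reserved_monthly / (rate_per_hour * HOURS_PER_MONTH)


def cheaper_option(reserved_monthly: float, rate_per_hour: float, utilization: float) -> str:
    """Return which billing model costs less at the given utilization."""
    usage_cost = consumption_monthly_cost(rate_per_hour, utilization)
    return "reserved" if reserved_monthly < usage_cost else "consumption"


if __name__ == "__main__":
    reserved, rate = 50_000.0, 100.0  # hypothetical prices
    print(f"break-even utilization: {break_even_utilization(reserved, rate):.0%}")
    for u in (0.4, 0.9):
        print(f"utilization {u:.0%}: {cheaper_option(reserved, rate, u)} is cheaper")
```

Steady training pipelines that keep accelerators busy tend to favor reservations. Bursty or experimental workloads tend to favor paying for what they use.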

Use Cases Driving AI Factory Adoption

Banks can fine-tune multilingual LLMs on proprietary transaction data, hospitals can train diagnostic imaging models on governed datasets, and manufacturers can deploy real-time vision systems where milliseconds affect operational efficiency. Government agencies can process classified workloads without the risks of cross-border data transmission. Global enterprises can establish regional AI hubs that comply with local data residency requirements while keeping their tooling consistent across jurisdictions.

Strategic Implications for Hybrid AI Architectures

AI Factories redefine hybrid cloud boundaries by extending AWS management capabilities into customer-controlled environments. The approach addresses growing demand for AI compute capacity and for data control at the same time. Organizations gain the benefits of the cloud operating model, such as elastic scaling, managed services, and global support, without compromising physical sovereignty or audit requirements. The service positions AWS as a flexible partner across the full spectrum of deployment preferences.

Future Trajectory of On-Premises AI Infrastructure

As AI workloads mature, hybrid architectures that combine on-premises sovereignty with cloud elasticity are likely to become the dominant operating model. In such an architecture, AI Factories can keep primary operations within controlled boundaries while bursting suitable workloads to public cloud resources when local capacity runs short. This flexibility addresses the dual pressures of performance requirements and compliance mandates, and may give early adopters a competitive advantage in AI-driven transformation.
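A placement policy for this kind of bursting can be sketched in a few lines. Sensitive jobs get first claim on local capacity and never leave the controlled boundary. Non-sensitive jobs use whatever local capacity remains and otherwise burst to the cloud. This is a hypothetical first-fit policy written for illustration. The `Job` type, `place_jobs`, and the GPU counts are assumptions, not an AWS scheduler or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    name: str
    gpus: int
    sensitive: bool  # True if the job's data must stay inside the controlled boundary


def place_jobs(jobs: list[Job], onprem_gpus: int) -> dict[str, str]:
    """Map each job name to 'on-prem', 'cloud', or 'queued' using first-fit placement."""
    placement: dict[str, str] = {}
    free = onprem_gpus
    # Stable sort: sensitive jobs (key False) come first and keep their original order.
    for job in sorted(jobs, key=lambda j: not j.sensitive):
        if job.gpus <= free:
            placement[job.name] = "on-prem"
            free -= job.gpus
        elif job.sensitive:
            placement[job.name] = "queued"  # wait for local capacity; never burst
        else:
            placement[job.name] = "cloud"   # burst non-sensitive work to public cloud
    return placement


if __name__ == "__main__":
    jobs = [
        Job("public-benchmark", gpus=16, sensitive=False),
        Job("fraud-model-train", gpus=48, sensitive=True),
        Job("synthetic-data-gen", gpus=8, sensitive=False),
        Job("imaging-finetune", gpus=32, sensitive=True),
    ]
    for name, where in place_jobs(jobs, onprem_gpus=64).items():
        print(f"{name}: {where}")
```

Pure first-fit lets a small non-sensitive job take leftover local GPUs while a larger sensitive job waits. A production scheduler would likely add reservations or preemption to prevent this.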